Are Chatbots Really the Best Way to Interact with Large Language Models?

The Promise and Challenges of Conversational AI as a Tool of Thought

Conversational interfaces powered by artificial intelligence hold great promise as new “tools of thought”: interfaces that can augment human cognition in powerful ways. Yet the road to realizing this vision is not without its bumps. Simply inserting an AI chatbot into an existing workflow does not automatically make it an effective cognitive tool. Careful design, grounded in a keen understanding of both human cognition and AI’s strengths, is paramount.

A core challenge lies in the technical limitations of today’s large language models. Their limited context windows make it hard to maintain coherence and keep track of references across long, multifaceted dialogues. Although solutions such as external persistent memory stores and careful prompt engineering are emerging, fully overcoming these context limits remains an active area of research.
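To make the context-window problem concrete, here is a minimal Python sketch, assuming a fixed token budget and a naive policy of keeping only the most recent turns. The names `Turn`, `approx_tokens`, and `fit_to_window` are hypothetical, and counting whitespace-separated words as tokens is a deliberate simplification; real models use their own subword tokenizers.

```python
from dataclasses import dataclass


@dataclass
class Turn:
    role: str  # "user" or "assistant"
    text: str


def approx_tokens(text: str) -> int:
    # Simplifying assumption: one "token" per whitespace-separated word.
    # A real system would use the model's own tokenizer.
    return len(text.split())


def fit_to_window(history: list[Turn], budget: int) -> list[Turn]:
    """Keep the most recent turns whose combined approximate size fits in `budget`.

    Older turns are silently dropped, which is how references to the early
    parts of a long dialogue get lost.
    """
    kept: list[Turn] = []
    used = 0
    for turn in reversed(history):  # walk from newest to oldest
        cost = approx_tokens(turn.text)
        if used + cost > budget:
            break  # everything older than this turn is discarded
        kept.append(turn)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


if __name__ == "__main__":
    history = [
        Turn("user", "Let us call the portfolio we discussed Plan A"),
        Turn("assistant", "Noted, Plan A is the conservative allocation"),
        Turn("user", "Now compare its fees with the index fund"),
        Turn("assistant", "The index fund is cheaper by a small margin"),
        Turn("user", "So should I switch away from Plan A"),
    ]
    for turn in fit_to_window(history, budget=25):
        print(f"{turn.role}: {turn.text}")
```

With a budget of 25 "tokens", only the last three turns survive: the two turns that define Plan A are dropped, so the final question refers to something the model can no longer see. Roughly speaking, external memory stores try to address this by retrieving relevant older material instead of simply discarding it.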

While refining AI’s technical capabilities is a significant challenge, another aspect is equally important: integrating it into established human workflows. This becomes particularly evident when we compare GUIs (Graphical User Interfaces) with LUIs (Language-based User Interfaces).

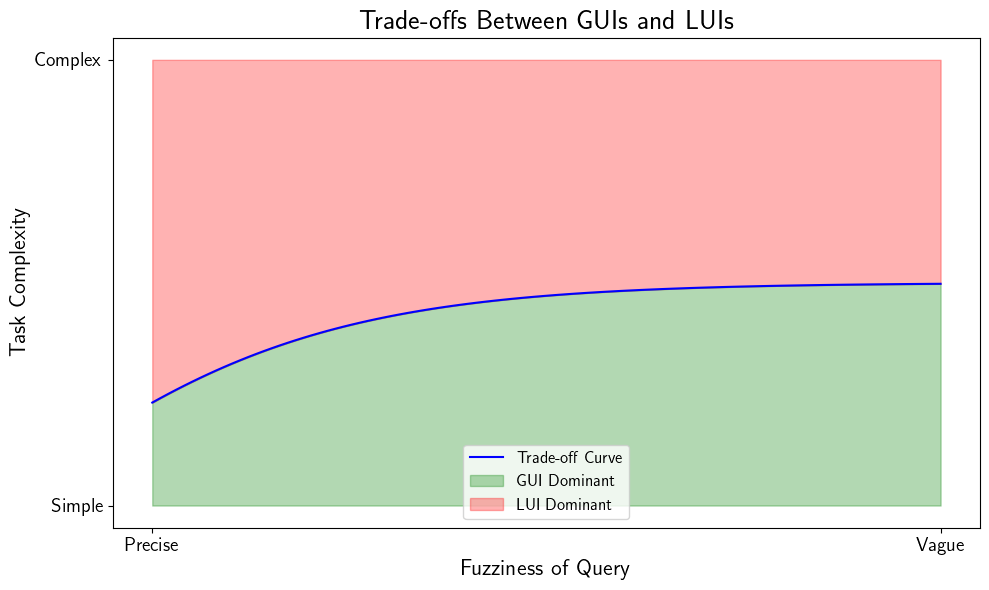

GUIs are ideal for precise tasks with well-defined outcomes. They’re structured, sequential, and streamlined. LUIs, on the other hand, excel when the desired outcome is more ambiguous or the task demands a conversational approach. They’re flexible, fluid, and open-ended. The key isn’t to champion one over the other, but to integrate them harmoniously. There is a clear trade-off between using a GUI and using a LUI within a product or tool, and we can intuitively sketch that trade-off as a curve, as shown in the figure above.

The two axes in the figure are “Fuzziness of Query” and “Task Complexity”. The former measures how precise the query is. For example, “What is the price of AAPL?” is a precise query, whereas “What factors will influence the price of AAPL over the next year?” is a rather vague one. The latter measures how complex the task is. Here, complexity is not defined by the number of steps needed to reach the answer. Instead, it captures the idea that we don’t know a priori which steps to follow, or at the very least, that some exploration is involved.

For complex tasks, the GUI approach has been to shrink the space of possible interactions by restricting what the user can do: the interface is designed so that only a certain set of actions is available. This is a very effective way of reducing the complexity of the task. However, it also limits the kinds of questions the user can ask.

LUIs, on the other hand, are much more flexible, allowing the user to ask a wide variety of questions. But the chatbot interface, to my mind, is still limited. It remains a linear progression of questions and answers, and the user cannot explore tangents or digressions.

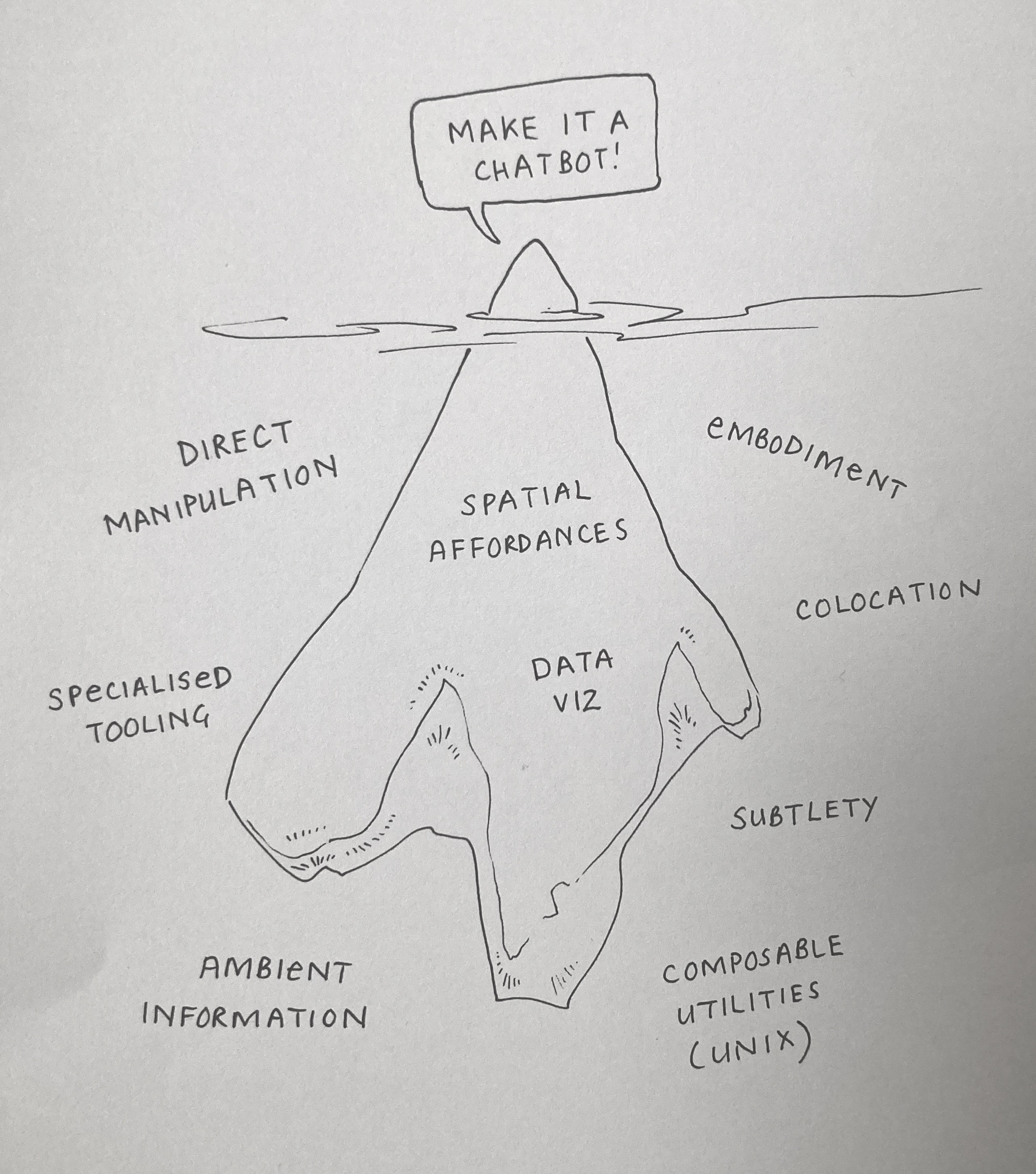

The sketch above, from Maggie Appleton, is a great way to visualize the current state of affairs. In the companion blog post from which this image is taken, she goes on to show mock-ups of different modes of interaction with LLMs.

Branching Conversations: The Untapped Potential of Conversational AI

Drawing parallels with familiar tools can sometimes cast new light on seemingly unrelated areas. Consider Jupyter notebooks, a tool revered by data scientists and researchers alike. At its core, Jupyter embodies a linear progression: each code cell is executed in sequence, often building on the results of the previous one. It’s structured, sequential, and streamlined.

Yet, the human mind isn’t always linear. Our thought processes are intricate, branching out in multiple directions, exploring various tangents, and occasionally circling back to revisit earlier ideas. Just as rivers have tributaries, so too do our conversations have digressions. And it’s in these meandering tributaries that we often stumble upon the most profound insights.

Conversational interfaces of today, in many ways, mimic the linear progression of tools like Jupyter. You ask a question, get a response, and then move to the next query. But what if you wanted to explore a tangent? Or loop back to an earlier point after gaining a new insight? Most chatbots and conversational tools stumble here. Their designs don’t adequately support the branching, meandering nature of genuine human dialogue.

Here is a demo, again from Maggie Appleton, of the kind of interface I have in mind.

This gap represents an exciting frontier for Conversational AI. Imagine an AI interface that not only allows but encourages multi-threaded conversations. A tool where users can digress, explore side topics, and then seamlessly return to the main thread of discussion. Such an interface wouldn’t just mimic human conversation; it would elevate it, adding layers of depth and dimension that current tools can’t offer.
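One way to picture such an interface is to store the conversation as a tree rather than a list. The Python sketch below is a hypothetical data structure of my own, not Maggie Appleton's design or any product's API; the names `Node`, `ConversationTree`, `add`, `checkout`, and `context`, as well as the system prompt at the root, are illustrative assumptions. Each message points to its parent, the user can jump back to any earlier message and branch from it, and the context sent to the model is just the path from the root to the current message.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


# eq=False: nodes compare by identity, and repr=False on the links avoids
# printing the whole tree every time a single node is shown.
@dataclass(eq=False)
class Node:
    role: str  # "system", "user" or "assistant"
    text: str
    parent: Optional[Node] = field(default=None, repr=False)
    children: list[Node] = field(default_factory=list, repr=False)


class ConversationTree:
    """Hypothetical branching conversation: any message can start a new thread."""

    def __init__(self) -> None:
        # Assumed root: a system prompt shared by every branch.
        self.root = Node("system", "You are a helpful assistant.")
        self.cursor = self.root  # the message the user is currently "standing on"

    def add(self, role: str, text: str) -> Node:
        # Attach a new message under the cursor and move the cursor to it.
        node = Node(role, text, parent=self.cursor)
        self.cursor.children.append(node)
        self.cursor = node
        return node

    def checkout(self, node: Node) -> None:
        # Jump back to an earlier message; the next `add` starts a new branch there.
        self.cursor = node

    def context(self) -> list[tuple[str, str]]:
        # Only the path from the root to the cursor is sent to the model,
        # so sibling tangents do not leak into the current thread.
        path: list[tuple[str, str]] = []
        node: Optional[Node] = self.cursor
        while node is not None:
            path.append((node.role, node.text))
            node = node.parent
        return list(reversed(path))


if __name__ == "__main__":
    tree = ConversationTree()
    tree.add("user", "What factors will influence the price of AAPL over the next year?")
    main_answer = tree.add("assistant", "(answer listing several factors)")
    tree.add("user", "Tangent: how do interest rates affect tech valuations?")
    tree.add("assistant", "(answer about interest rates)")
    tree.checkout(main_answer)  # return to the main thread
    tree.add("user", "Tell me more about the second factor.")
    for role, text in tree.context():
        print(f"{role}: {text}")
```

In this sketch, returning to the main thread means the interest-rate tangent stays in the tree but is left out of the prompt, which also keeps the prompt shorter. A real tool might instead summarize what was learned on a tangent and carry that summary back into the main thread; that is a design choice this sketch does not attempt.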

This direction promises not only improved user experience but also the potential to uncover deeper insights through the serendipity of meandering discussions. By pushing the boundaries of Conversational AI beyond the linear and into the realm of the branching, we move closer to unlocking its full potential as a genuine “tool of thought.”

Industry Practices as “Tools of Thought”

In many industries, such as wealth management, professionals have honed their expertise using GUI tools like Excel. Introducing new interaction modalities, particularly voice and text, can therefore be jarring. This is where the distinction between GUIs and LUIs becomes crucial: GUIs provide the structured path these professionals rely on for precise tasks, while LUIs come into their own when the desired outcome is ambiguous or the task calls for a conversational approach.

Facilitating easy switches between these modalities not only offers flexibility but also encourages users to compare, adapt, and gain comfort with the new AI-driven interfaces. Observing when AI dialogues prompt novel questions or insights can shed light on their value in enhancing cognition. The North Star? A synergy that marries the distinct strengths of AI with human creativity.

Potential advances in conversational AI point to a compelling scenario: a framework in which routine tasks, such as data analysis, are streamlined, making for a more efficient cognitive workflow. As we work towards this goal, it becomes crucial to treat conversational AI not merely as an innovative technology, but as an interface that aligns with human cognition. With careful development, it might offer nuanced pathways that support our thinking and, hopefully, open new horizons for human cognition.